Introduction

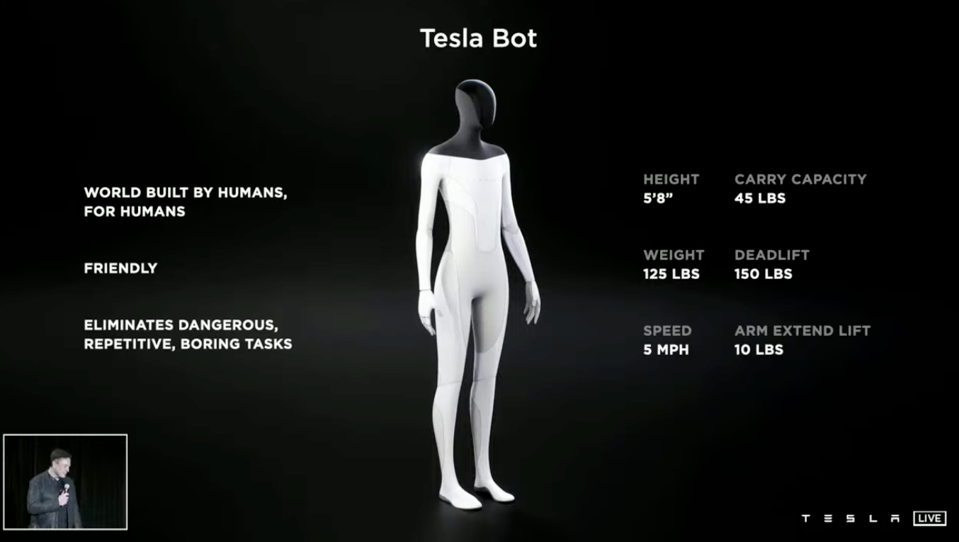

The Tesla Bot, also known as Optimus, is Tesla’s effort to build a general-purpose, bipedal, autonomous humanoid robot. It is intended to take on tasks that are unsafe, repetitive, or monotonous for humans. By drawing on Tesla’s expertise in software stacks, deep learning, computer vision, and motion planning, the project aims to change how automation is approached. This article explores the vision behind the Tesla Bot, the technologies driving its development, and the engineering challenges that must be addressed to bring the project to life.

1. The Vision Behind Tesla Bot

The Tesla Bot aims to address several key areas:

- Task Automation: The robot is designed to perform repetitive and mundane tasks that are currently handled by humans, such as factory work, inventory management, and even household chores.

- Safety: By taking on dangerous tasks, the Tesla Bot can reduce human exposure to hazardous environments, improving overall safety in workplaces and homes.

- Productivity: The automation of routine tasks can enhance productivity across various sectors, from manufacturing to domestic life.

Tesla’s vision for the Tesla Bot includes creating a versatile and adaptable robot capable of operating in a wide range of environments. The goal is to develop a humanoid robot that not only performs tasks efficiently but also interacts seamlessly with the physical world.

2. Core Technologies

2.1. Deep Learning

- Purpose: Deep learning algorithms enable the Tesla Bot to interpret complex data from its environment. This includes object recognition, speech processing, and decision-making.

- Applications: Deep learning helps the robot learn from experience and adapt to new situations, making it more effective in performing a variety of tasks.

2.2. Computer Vision

- Purpose: Computer vision enables the Tesla Bot to perceive and interpret visual information from its surroundings. This involves detecting and identifying objects, navigating through spaces, and recognizing faces or gestures.

- Applications: With advanced computer vision, the robot can navigate safely, interact with objects, and respond to visual cues, enhancing its ability to perform tasks autonomously.

2.3. Motion Planning and Controls

- Purpose: Motion planning involves creating algorithms that allow the robot to move efficiently and safely through its environment. Controls ensure precise execution of these movements.

- Applications: These technologies enable the robot to walk, balance, and manipulate objects with accuracy, ensuring smooth and coordinated actions.
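
To make the "controls" half of this concrete, here is a minimal sketch of a proportional-derivative (PD) controller driving a single simulated joint to a target angle. It is an illustration only, not the Tesla Bot's actual controller: the function name `simulate_joint_pd`, the gains, the frictionless joint model, and the time step are all assumptions chosen for the example.

```python
def simulate_joint_pd(target, kp=40.0, kd=12.0, inertia=1.0, dt=0.001, steps=5000):
    """Drive one idealized rotary joint to `target` (radians) with PD control.

    Hypothetical model: a frictionless joint with constant inertia.
    Returns the final (angle, velocity) after `steps` time steps.
    """
    angle, velocity = 0.0, 0.0
    for _ in range(steps):
        error = target - angle
        # Proportional term pulls toward the target; derivative term damps motion.
        torque = kp * error - kd * velocity
        acceleration = torque / inertia
        # Semi-implicit Euler integration: update velocity first, then position.
        velocity += acceleration * dt
        angle += velocity * dt
    return angle, velocity


if __name__ == "__main__":
    final_angle, final_velocity = simulate_joint_pd(target=1.0)
    print(f"angle={final_angle:.4f} rad, velocity={final_velocity:.4f} rad/s")
```

A motion planner would supply the sequence of `target` values over time, and a controller of this kind would track them joint by joint. Real humanoid controllers also account for gravity, friction, and coupling between joints, which this sketch ignores.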

2.4. Mechanical Engineering

- Purpose: Mechanical engineering focuses on designing and constructing the physical components of the Tesla Bot, including its joints, limbs, and actuators.

- Applications: Robust mechanical design ensures the robot’s durability, stability, and capability to perform a wide range of physical tasks.

2.5. General Software Engineering

- Purpose: General software engineering encompasses the development of the software stack that integrates various subsystems of the robot, including its operating system, application software, and user interfaces.

- Applications: This software enables the robot to perform its functions, interact with users, and integrate with other systems and devices.

3. Engineering Challenges

3.1. Balance and Stability

- Challenge: Achieving stable and balanced bipedal locomotion is one of the most complex aspects of humanoid robotics. The robot must maintain balance while walking, turning, and performing various tasks.

- Solutions: Advanced control algorithms, real-time sensor feedback, and sophisticated motion planning techniques are used to address these challenges.
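
The balance problem is often introduced with an inverted pendulum, which falls over unless feedback keeps correcting it. The sketch below uses that textbook stand-in, not a model of the Tesla Bot itself. The function `simulate_balance`, the feedback gains, and the pendulum parameters are assumptions made for illustration.

```python
import math


def simulate_balance(initial_tilt, kp, kd, g_over_l=9.81, dt=0.001, steps=5000):
    """Simulate an inverted pendulum and return (final_tilt, peak_tilt) in radians.

    Tilt is measured from upright. The feedback law (an assumption for this
    sketch) applies a corrective acceleration of -kp * tilt - kd * tilt_rate.
    """
    tilt, rate = initial_tilt, 0.0
    peak = abs(tilt)
    for _ in range(steps):
        control = -kp * tilt - kd * rate
        # Gravity pushes the pendulum away from upright; control pushes it back.
        accel = g_over_l * math.sin(tilt) + control
        rate += accel * dt
        tilt += rate * dt
        peak = max(peak, abs(tilt))
    return tilt, peak


if __name__ == "__main__":
    print("with feedback:   ", simulate_balance(0.2, kp=50.0, kd=10.0))
    print("without feedback:", simulate_balance(0.2, kp=0.0, kd=0.0))
```

With feedback, the tilt decays toward zero. With both gains set to zero, the same starting tilt grows until the pendulum falls, which is why sensor feedback has to run continuously and in real time.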

3.2. Navigation and Environment Interaction

- Challenge: Navigating complex environments and interacting with dynamic objects requires precise perception and decision-making capabilities.

- Solutions: Combining computer vision with deep learning and motion planning allows the robot to map its environment, avoid obstacles, and execute tasks effectively.
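
As a simplified picture of the "map and avoid obstacles" step, the sketch below plans a path on a small occupancy grid with breadth-first search. The grid, the function name `shortest_path`, and the four-direction movement model are assumptions for the example. A real robot would plan in continuous space from a map built by its perception system.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a grid where 0 is free space and 1 is an obstacle.

    Returns the list of (row, col) cells from start to goal, or None if the
    goal cannot be reached. Movement is limited to up, down, left, and right.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to reconstruct the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# Hypothetical 4x4 map: the only gap in the wall on row 1 is at column 2.
GRID = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]

if __name__ == "__main__":
    print(shortest_path(GRID, (0, 0), (3, 0)))
```

Breadth-first search returns a path with the fewest grid moves. Planners such as A* follow the same pattern but use a distance estimate to explore fewer cells.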

3.3. Integration of Systems

- Challenge: Integrating various subsystems, including hardware components, sensors, and software algorithms, presents significant engineering complexity.

- Solutions: A modular design approach, along with robust testing and simulation, helps ensure that all components work together.

3.4. Safety and Reliability

- Challenge: Ensuring the safety and reliability of the Tesla Bot across operating conditions is crucial. The robot must perform tasks without causing harm to people, itself, or its environment.

- Solutions: Safety features, redundancy in critical systems, and rigorous testing protocols are used to support reliable operation and reduce the risk of accidents.
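
One common way to use redundancy in a critical system is to read the same quantity from several sensors and take the median, so that a single faulty reading cannot dominate. The sketch below illustrates that general idea. The function `vote`, the threshold, and the sample readings are hypothetical and do not describe the Tesla Bot's actual safety design.

```python
from statistics import median


def vote(readings, max_deviation):
    """Fuse redundant sensor readings by taking their median.

    Returns (fused_value, faulty_indices), where faulty_indices lists the
    sensors whose reading differs from the median by more than max_deviation.
    """
    fused = median(readings)
    faulty = [i for i, value in enumerate(readings) if abs(value - fused) > max_deviation]
    return fused, faulty


if __name__ == "__main__":
    # Three hypothetical joint-angle sensors (radians); the third has failed.
    fused, faulty = vote([0.50, 0.52, 9.99], max_deviation=0.1)
    print(fused, faulty)  # prints: 0.52 [2]
```

With three sensors, the median tolerates one arbitrary failure. The list of flagged sensors can then be logged or used to trigger a safe stop.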

4. Applications and Use Cases

4.1. Industrial Automation

- Application: In manufacturing and logistics, the Tesla Bot can handle repetitive tasks such as assembly, material handling, and quality inspection.

- Impact: Increased efficiency, reduced labor costs, and improved safety in hazardous environments.

4.2. Household Assistance

- Application: The robot can assist with household chores such as cleaning, cooking, and organizing.

- Impact: Enhanced convenience and quality of life for users, particularly for individuals with mobility challenges or busy lifestyles.

4.3. Healthcare and Support

- Application: In healthcare settings, the Tesla Bot can provide support for patients, assist with mobility, and help with routine medical tasks.

- Impact: Improved patient care, reduced strain on healthcare professionals, and enhanced support for elderly or disabled individuals.

4.4. Service Industry

- Application: The robot can be employed in service roles such as customer support, delivery, and concierge services.

- Impact: Enhanced customer experiences, operational efficiency, and new service possibilities in various sectors.

5. Hiring and Talent Acquisition

5.1. Deep Learning Engineers

- Role: Develop and implement advanced algorithms for perception, learning, and decision-making.

- Skills: Expertise in neural networks, machine learning frameworks, and data analysis.

5.2. Computer Vision Specialists

- Role: Create and refine vision systems for object detection, recognition, and tracking.

- Skills: Proficiency in image processing, feature extraction, and visual perception.

5.3. Motion Planning Experts

- Role: Design algorithms for autonomous movement, including navigation, path planning, and obstacle avoidance.

- Skills: Knowledge of kinematics, dynamics, and control systems.

5.4. Controls Engineers

- Role: Develop and optimize control systems for precise movement and balance.

- Skills: Experience with control theory, robotics, and real-time systems.

5.5. Mechanical Engineers

- Role: Design and build the physical components of the robot, including actuators, joints, and structures.

- Skills: Expertise in mechanical design, robotics, and materials science.

5.6. General Software Engineers

- Role: Develop the software stack that integrates hardware and algorithms, including operating systems and user interfaces.

- Skills: Proficiency in software development, system integration, and user experience design.